I spend a lot of time explaining AI terms and acronyms in conversations, particularly network-related ones, so I decided to create a single reference post with the most common ones. If you’re starting your AI journey or just need a quick refresher, I hope this glossary helps you cut through the jargon and understand the essentials.

Generic AI Terms

ChatGPT (Chat Generative Pre-trained Transformer): A popular chatbot developed by OpenAI.

Chatbot: A computer program that uses AI to understand and respond to human language in a conversational way, e.g. ChatGPT.

AI Agent: An AI program that can operate without human interaction and has a specific objective, e.g. booking a flight or analysing data.

Hallucination: When an AI chatbot generates information that sounds plausible but is incorrect or fabricated.

Prompt Engineering: Intelligently crafting inputs to guide LLM responses to more accurately meet your intent, i.e. asking ChatGPT something in such a way that gives you exactly the response you were looking for.

Agentic AI: Advanced form of AI focused on autonomous decision making and goal driven behaviour with limited human intervention. Uses AI agents that can plan, adapt and execute tasks in dynamic environments.

Physical AI: AI that enables machines and robots to perceive, understand and interact with the physical world.

FP (Floating Point): e.g. FP4, FP8, FP16, up to FP32. The number is how many bits are used to store each value. The more bits, the higher the precision, but the slower the computation and the more memory needed. The sweet spot for training is generally FP16: still accurate, but with faster training times. FP8 is faster again and is commonly used in inferencing.
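As a rough illustration of the precision trade-off, the sketch below stores the same value in 16, 32 and 64 bits using Python's standard `struct` module and reports how much rounding error each format introduces. FP4 and FP8 are left out because `struct` has no codes for them, and the helper names are made up for this example.

```python
import struct

# struct format codes: 'e' = 16-bit half, 'f' = 32-bit single, 'd' = 64-bit double
FORMATS = {"FP16": "e", "FP32": "f", "FP64": "d"}


def round_trip(value: float, fmt: str) -> float:
    """Store `value` in the given format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def storage_report(value: float) -> dict:
    """Return {format name: (bytes used, absolute rounding error)}."""
    report = {}
    for name, fmt in FORMATS.items():
        stored = round_trip(value, fmt)
        report[name] = (struct.calcsize(fmt), abs(stored - value))
    return report


for name, (size, error) in storage_report(0.1).items():
    print(f"{name}: {size} bytes, rounding error {error:.2e}")
```

Fewer bits means less memory and less data to move per value, which is where the speed-up comes from, at the cost of a larger rounding error.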

Token: A small piece of text, usually a word, part of a word, or a punctuation mark. For example, the phrase ‘AI is amazing.’ is 4 tokens: “AI”, “is”, “amazing”, “.” An AI model converts all inputs and outputs to tokens, and the number of tokens determines how much text you can process and how much it costs. Tokens are then converted to vectors, which attach numeric meaning and context to the string of tokens. In retrieval systems such as RAG, these vectors can be stored in a vector database for similarity search.
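To make the ‘AI is amazing.’ example concrete, here is a toy tokenizer that splits on words and punctuation. It is only a sketch: real LLM tokenizers use learned subword vocabularies, so their token counts will often differ, and `toy_tokenize` is a made-up name.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into words and single punctuation marks (toy rule, not a real LLM tokenizer)."""
    return re.findall(r"\w+|[^\w\s]", text)


tokens = toy_tokenize("AI is amazing.")
print(tokens, len(tokens))  # ['AI', 'is', 'amazing', '.'] 4
```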

Embedding: A numerical representation of meaning, concept, or intent learned by an AI model.

For example, the words “coffee” and “tea” produce similar embeddings because they have similar semantic meaning.

An embedding is expressed as a vector, a list of numerical values, where each number captures some aspect of the underlying meaning. These vectors are stored in a vector database to enable semantic search and retrieval.

Vector Database: A vector database is a type of database used in AI that stores data as vectors (lists of numbers). Instead of searching for exact matches, it enables similarity search, finding items that are “close” in meaning or features. For example, if you like Product A, the system may suggest Product B because their vectors share several similarities.
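A minimal sketch of similarity search, assuming tiny hand-written vectors. The three-dimensional "embeddings" below are invented for illustration (real models produce hundreds or thousands of dimensions), and a real vector database would use an index rather than comparing against every item.

```python
import math

# Hypothetical 3-dimensional embeddings, invented for this example.
STORE = {
    "coffee": [0.9, 0.8, 0.1],
    "tea": [0.8, 0.9, 0.2],
    "router": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return 1.0 for vectors pointing the same way, lower values as they diverge."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query: str) -> str:
    """Return the stored item closest in direction to `query`, excluding itself."""
    candidates = (name for name in STORE if name != query)
    return max(candidates, key=lambda name: cosine_similarity(STORE[query], STORE[name]))


print(most_similar("coffee"))  # tea
```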

Context Window: The maximum number of tokens an LLM can process at once. For example, it is the ‘memory’ within a chat window of the information you have previously given.
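The sketch below shows one way a chat application might trim history to fit a context window, which is why a chatbot can appear to ‘forget’ earlier messages. It counts words as a crude stand-in for tokens, and `fit_to_context` is a hypothetical helper, not any particular product's behaviour.

```python
def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits the window."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = len(message.split())  # crude stand-in for a real token count
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = ["my name is Sam", "I manage a GPU cluster", "what is my name"]
print(fit_to_context(history, max_tokens=9))
# ['I manage a GPU cluster', 'what is my name']
```

With a budget of 9 tokens the oldest message no longer fits, so the model never sees the name it is being asked about.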

Inferencing: Using a pre-trained AI model to generate predictions or responses based solely on its training data.

Key Point: No external updates; the model relies on internal knowledge.

Analogy: Answering a question from memory.

RAG: Retrieval Augmented Generation.

An AI technique that combines a language model with real-time data retrieval from external sources before generating an answer.

Key Point: Adds fresh, domain-specific context to overcome model knowledge cutoff.

Analogy: Checking a reference book before answering.
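A toy sketch of the retrieve-then-generate flow, assuming a tiny in-memory list of documents and simple word overlap in place of a real vector search. The documents and function names are invented for illustration, and the final call to a language model is left out.

```python
import re

# Hypothetical knowledge base; a real system would query a vector database.
DOCUMENTS = [
    "The NVL72 rack is liquid cooled.",
    "Slurm schedules jobs across cluster nodes.",
    "PG25 is a common secondary loop coolant.",
]


def words(text: str) -> set[str]:
    """Lower-case the text and return its set of words, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, documents: list[str]) -> str:
    """Return the document that shares the most words with the question."""
    return max(documents, key=lambda doc: len(words(question) & words(doc)))


def build_prompt(question: str, documents: list[str]) -> str:
    """Prepend the retrieved context so the model can answer from it."""
    context = retrieve(question, documents)
    return f"Context: {context}\nQuestion: {question}\nAnswer using only the context."


print(build_prompt("Which coolant is common in the secondary loop?", DOCUMENTS))
```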

LLM (Large Language Model): An AI program trained on massive datasets to understand and generate human language.

LRM: Large Reasoning Model. A form of LLM that has undergone reasoning-focused fine tuning to handle multi-step reasoning tasks. Instead of just predicting the next word (like traditional LLMs), it works through intermediate reasoning steps before giving its final answer.

Fine Tuning: The process of taking a pre‑trained AI model and adjusting it with new, specific data so it performs better on a particular task. It is like teaching an AI model that already knows a lot of general stuff to specialise in your subject.

MCP (Model Context Protocol): A specialised protocol designed for AI models. It standardises how models connect to external tools and data sources, making it easier to provide context and structured results. Unlike APIs, which vary by service, MCP acts as a universal adapter for AI systems, ensuring consistent integration across different environments.

MoE: Mixture of Experts: An AI model design where multiple specialised “expert” networks are trained for different tasks or patterns, and a gating mechanism decides which expert(s) to activate for each input. This makes the model more efficient, since only a few experts are used at a time instead of the whole network.
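A minimal sketch of the routing idea, assuming four stand-in "experts" that are just simple functions. In a real MoE model the experts are neural sub-networks and the gate scores come from a learned gating network; here the scores are passed in by hand.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical experts: simple functions standing in for neural sub-networks.
EXPERTS = [
    lambda x: x * 2.0,
    lambda x: x + 10.0,
    lambda x: -x,
    lambda x: x * x,
]


def moe_forward(x, gate_scores, top_k=2):
    """Run only the top_k experts chosen by the gate and blend their outputs."""
    ranked = sorted(range(len(EXPERTS)), key=lambda i: gate_scores[i], reverse=True)
    active = ranked[:top_k]
    weights = softmax([gate_scores[i] for i in active])
    output = sum(w * EXPERTS[i](x) for w, i in zip(weights, active))
    return output, active


output, active = moe_forward(3.0, gate_scores=[2.0, 2.0, -1.0, 0.5])
print(active, output)  # [0, 1] 9.5
```

Only two of the four experts run for this input, which is where the efficiency gain comes from.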

All‑reduce: A communication step used in multi‑GPU training where each GPU shares its results, and they are combined into one final value that every GPU receives. This keeps all GPUs in sync so they update the model the same way during distributed training.
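The result of a sum all‑reduce can be simulated in a few lines. This sketch models each GPU as a plain Python list and only shows the outcome; it says nothing about how libraries actually move the data between devices.

```python
def all_reduce_sum(per_gpu_values):
    """Simulate a sum all-reduce: every 'GPU' ends up with the same summed vector."""
    reduced = [sum(values) for values in zip(*per_gpu_values)]
    return [list(reduced) for _ in per_gpu_values]


# Three simulated GPUs, each holding its own local gradients.
local_gradients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(all_reduce_sum(local_gradients))
# [[9.0, 12.0], [9.0, 12.0], [9.0, 12.0]]
```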

Infrastructure / Hardware

CPO: Co-Packaged Optics. CPO modules use silicon photonics to integrate optical components directly alongside high-speed electronic chips, like switch ASICs or AI accelerators, within a single package.

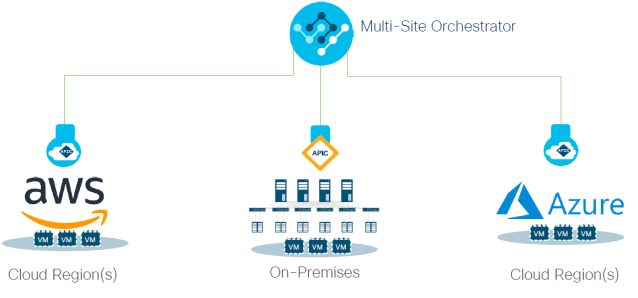

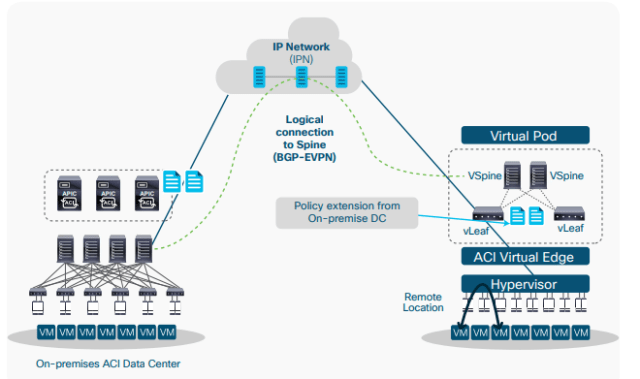

Hyperscaler – Large cloud provider, e.g. AWS, Azure, GCP.

Neoscaler/Neocloud – Emerging providers focused on specialised AI infrastructure at scale, typically smaller and more agile than a hyperscaler, e.g. CoreWeave, Lambda Labs. They commonly offer GPU as a Service (GPUaaS).

Offtaker: The downstream client actually booking and paying for the resources from the GPUaaS provider.

GPU: Graphics Processing Unit is a specialised processor originally designed for rendering graphics, now widely used in AI for its ability to perform thousands of parallel computations simultaneously. This architecture makes GPUs ideal for accelerating deep learning tasks such as training neural networks and running inference, dramatically reducing processing time compared to traditional CPUs

DPU: Data Processing Unit. A specialised processor designed to offload and accelerate data-centric tasks such as networking, storage, and security from the CPU. DPUs typically combine programmable cores, high-speed network interfaces, and hardware accelerators to handle packet processing, encryption, and virtualisation, improving performance and freeing up CPU resources for application workloads (e.g. NVIDIA BlueField).

TPU: Tensor Processing Unit. A purpose-built chip by Google for AI and ML acceleration. It can offer significant speed and efficiency gains over GPU or CPU based systems for the workloads it targets, but it is not as flexible, as it is optimised for a narrower set of operations.

NPU (Native Processing Unit): An emerging class of processor that uses photonic (light-based) technology instead of traditional electronic signalling to perform computations. By using photons, these NPUs aim to deliver ultra-high bandwidth, dramatically lower power consumption, and minimal heat generation, which makes them attractive for accelerating AI workloads and large-scale data processing. Not to be confused with the more common use of NPU for Neural Processing Unit, an electronic AI accelerator built into many phones and PCs.

RDMA (Remote Direct Memory Access): Enables direct memory access between nodes without CPU involvement.

InfiniBand: A high‑performance, low‑latency networking technology widely used in supercomputing and AI training clusters. It supports advanced features such as RDMA (Remote Direct Memory Access) and collective offload, enabling efficient data movement at scale. While InfiniBand is an open standard maintained by the InfiniBand Trade Association (IBTA), in practice the ecosystem is heavily dominated by NVIDIA, following its acquisition of Mellanox in 2020.

RoCEv2 (RDMA over Converged Ethernet v2): A protocol that enables RDMA over Ethernet, carrying traffic in UDP/IP packets so it can be routed. It typically relies on a lossless (converged) Ethernet fabric using PFC, usually with congestion control such as DCQCN, and is common in HPC and storage networks.

Ultra Ethernet: An open standard designed to evolve Ethernet for AI and HPC workloads, focusing on low latency, congestion control, and scalability. It aims to provide an Ethernet-based alternative to InfiniBand for GPU clusters

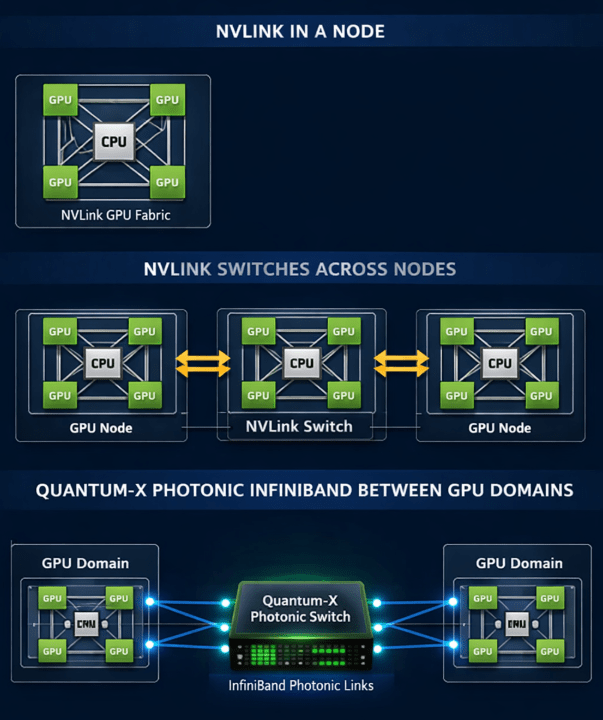

NVLink – NVIDIA’s high-speed GPU interconnect, usually used within a node.

NVSwitch – An advanced switch fabric that connects multiple NVLink-enabled GPUs inside large servers (e.g. DGX systems), and up to 72 GPUs in NVIDIA’s NVL72 rack-scale system.

DGX GB200: This system uses the NVIDIA GB200 Superchip, which combines the Grace CPU with Blackwell (B200) GPUs

DGX GB300: This system is based on the enhanced NVIDIA GB300 Superchip, featuring the Grace CPU with the Blackwell Ultra (B300) GPU

NVIDIA NVL4 Board – A GB200 Grace Blackwell ‘Superchip’ board with 4 x Blackwell GPUs (768 GB HBM3E memory) and 2 Grace CPUs (960 GB LPDDR5X memory), connected via NVLink to perform like a single unified processor. Designed for hyperscale AI with trillions of parameters.

NVIDIA NVL72 – Essentially the equivalent of 18 NVL4 boards in a single rack connected via NVLink: 72 Blackwell GPUs and 36 Grace CPUs, with roughly 13.8 TB of HBM3E plus roughly 17.3 TB of LPDDR5X memory, acting like a single giant GPU pool. Definitely more than the sum of its parts. Liquid cooled and can draw ~120 kW.
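The rack-level numbers follow from simple multiplication. The sketch below takes the per-board figures from the NVL4 entry at face value and scales them by 18; treat the results as back-of-the-envelope values rather than official specifications.

```python
# Per-board figures as quoted in the NVL4 entry
GPUS_PER_BOARD = 4
CPUS_PER_BOARD = 2
HBM3E_GB_PER_BOARD = 768
LPDDR5X_GB_PER_BOARD = 960
BOARDS_PER_RACK = 18


def rack_totals(boards: int = BOARDS_PER_RACK) -> dict:
    """Scale the per-board figures up to a full rack (1 TB taken as 1000 GB)."""
    return {
        "gpus": boards * GPUS_PER_BOARD,
        "cpus": boards * CPUS_PER_BOARD,
        "hbm3e_tb": boards * HBM3E_GB_PER_BOARD / 1000,
        "lpddr5x_tb": boards * LPDDR5X_GB_PER_BOARD / 1000,
    }


print(rack_totals())
# {'gpus': 72, 'cpus': 36, 'hbm3e_tb': 13.824, 'lpddr5x_tb': 17.28}
```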

NVIDIA NVL144 – The NVIDIA Vera Rubin NVL144 CPX contains 144 Rubin GPUs and 36 Vera CPUs in a single rack, providing 8 exaflops of AI performance, 7.5x more than NVIDIA GB300 NVL72 systems. Availability expected H2 2026.

NVIDIA Kyber NVL576 – 576 Rubin Ultra GPUs and 144 Vera CPUs in a single cohesive GPU domain spread over 2 racks (1 Kyber GPU rack and 1 Kyber sidecar rack for power conversion, chillers and monitoring), drawing up to 600 kW. Provides 15 exaflops at FP4 (inference) and 5 exaflops at FP8 (training). Availability expected H2 2027.

NVIDIA BasePOD A reference architecture for building GPU‑accelerated clusters using NVIDIA DGX systems. It provides validated designs for flexible AI and HPC deployments, allowing organisations to choose networking, storage, and supporting infrastructure from different vendors.

NVIDIA SuperPOD A turnkey AI data center solution from NVIDIA, delivering fully integrated GPU clusters at massive scale. It combines DGX systems, high‑speed networking, storage, and NVIDIA software into a complete package for enterprise and research workloads.

Trainium: A custom AI accelerator ASIC (like Google’s TPU, it is purpose built rather than general purpose) developed by Amazon Web Services (AWS) to deliver high-performance training and inference for machine learning models at lower power consumption and cost compared to general-purpose GPUs. Designed for deep learning workloads, Trainium offers optimised tensor operations and scalability for large-scale AI training in the cloud.

Smart NIC: is an advanced network interface card that includes onboard processing capabilities, often using programmable CPUs or DPUs, to offload networking, security, and virtualisation tasks from the host CPU. This enables higher performance, lower latency, and improved scalability for data centre and cloud environments by handling functions such as packet processing, encryption, and traffic shaping directly on the NIC.

HBM (High Bandwidth Memory): Memory dedicated to a GPU. It is energy efficient and uses a very wide bus (up to 1024 bits per stack), sitting on the same package as the GPU to minimise latency.

SXM (commonly expanded as Server PCI Express Module): A GPU module mounted directly on the motherboard rather than in an expansion slot, offering superior performance and scalability for large-scale projects like AI training.

PCIe (Peripheral Component Interconnect Express): In this context, a GPU supplied as a standard expansion card inserted into a PCIe slot. It provides flexibility and wider compatibility, making it a cost-effective fit for smaller-scale tasks.
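The wide bus is what gives HBM its bandwidth: peak bandwidth is roughly bus width multiplied by the per-pin data rate, divided by 8 to convert bits to bytes. The sketch below assumes a per-pin rate of 8 Gbit/s purely for illustration; actual rates vary by HBM generation.

```python
def stack_bandwidth_gb_per_s(bus_width_bits: int, pin_rate_gbit_per_s: float) -> float:
    """Peak bandwidth of one memory stack in GB/s (bits converted to bytes)."""
    return bus_width_bits * pin_rate_gbit_per_s / 8


# 1024-bit bus from the definition above; the 8 Gbit/s pin rate is an assumed value.
print(stack_bandwidth_gb_per_s(1024, 8.0))  # 1024.0
```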

CDU – Cooling Distribution Unit is a device used in liquid‑cooled data centers to circulate coolant between the building’s central cooling system (primary loop) and the liquid‑cooled IT equipment (secondary loop). It regulates coolant temperature, pressure, and flow to ensure safe, efficient heat removal from high‑density compute systems. The CDU usually contains the heat exchanger.

Primary cooling loop: This is the ‘facility level’ loop. It usually operates at higher pressures and volumes, serving many racks or an entire data hall, and carries facility cooling water (FCW), often mixed with glycol.

Secondary cooling loop: This is the ‘rack level’ loop. It circulates coolant to absorb heat from GPUs/CPUs and transfers it to the primary loop via a heat exchanger. Coolant from the primary and secondary loops never mixes, because the heat exchanger keeps the two loops isolated from each other.

Coolant: Water (purified/deionised) mixed with glycol (propylene or ethylene). A typical secondary loop fluid is PG25 (25% propylene glycol, 75% deionised water).

Single‑Phase Cooling (Water Cooling): A cooling method where water or treated coolant stays liquid while absorbing heat. The coolant warms up but does not change phase, making it simpler and widely used in today’s liquid‑cooled AI racks.
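For single-phase cooling, the heat carried away follows the relation heat = mass flow × specific heat × temperature rise. The sketch below rearranges that to estimate the coolant flow a rack would need. The specific heat is that of plain water and the 10 K temperature rise is an assumed figure; a glycol mix such as PG25 has a lower specific heat, so it would need somewhat more flow.

```python
def required_flow_kg_per_s(heat_watts: float, specific_heat_j_per_kg_k: float,
                           delta_t_kelvin: float) -> float:
    """Mass flow needed for the coolant to absorb heat_watts while warming by delta_t_kelvin."""
    return heat_watts / (specific_heat_j_per_kg_k * delta_t_kelvin)


RACK_HEAT_W = 120_000   # ~120 kW, the figure quoted for the NVL72 rack
WATER_CP = 4186.0       # J/(kg*K) for plain water (assumed; PG25 is lower)
DELTA_T_K = 10.0        # assumed coolant temperature rise across the rack

flow = required_flow_kg_per_s(RACK_HEAT_W, WATER_CP, DELTA_T_K)
print(f"{flow:.2f} kg/s")  # just under 3 kg/s
```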

Two‑Phase Cooling: A cooling method where the coolant boils inside the cold plate, absorbing heat by changing from liquid to vapor. This provides very high heat‑removal efficiency before the coolant condenses back to liquid. A two-phase coolant’s boiling point is typically between ~30 °C and 60 °C.

Tooling / Software

NVIDIA Mission Control is an integrated AI‑factory management platform that unifies deployment, monitoring, and orchestration of large‑scale GPU clusters.

It provides a central management plane that automates operations, reduces downtime, and accelerates model development across enterprise AI infrastructure. Built on Kubernetes‑based orchestration, it combines cluster management, workload scheduling, and real‑time system health monitoring for HPC and AI workloads. BCM & NetQ are deployed as part of Mission Control.

Bright Cluster Manager (BCM) is a general-purpose HPC and AI cluster lifecycle management platform, originally developed by Bright Computing (acquired by NVIDIA in 2022), used to provision, configure, and operate multi-vendor CPU and GPU clusters. It handles bare-metal provisioning, node management, monitoring, and integration with schedulers and Kubernetes. Bright is no longer sold as a standalone product and is now delivered as part of NVIDIA’s AI infrastructure stack.

NVIDIA Base Command Manager (BCM), not to be confused with Bright above, is an NVIDIA-specific AI infrastructure management and orchestration layer built on top of Bright Cluster Manager. It adds deep awareness of NVIDIA GPUs, NVLink/NVSwitch topologies, and AI software stacks, and integrates tightly with Kubernetes, the NVIDIA GPU Operator, and NGC. It is designed for operating large-scale NVIDIA AI systems such as DGX, HGX, NVL72, and NVIDIA Cloud Partner (NCP) platforms.

NGC (NVIDIA GPU Cloud) is NVIDIA’s curated catalog of GPU-optimized software, including prebuilt AI and HPC containers, models, libraries, and deployment packages. It enables clusters to run NVIDIA-validated workloads efficiently and reproducibly, and integrates with Base Command Manager for automated deployment on GPU-accelerated systems

NCP (NVIDIA Cloud Partner): An NCP is a service provider approved by NVIDIA to deliver GPU‑accelerated cloud services using a standardised, high‑performance NCP reference architecture.

Slurm: (Simple Linux Utility for Resource Management)

An open‑source workload manager that schedules and runs jobs on clusters of computers. It’s widely used in high‑performance computing (HPC) and AI to allocate resources, manage queues, and coordinate parallel tasks across many nodes.

NVIDIA NetQ is a scalable network‑operations toolset that provides real‑time visibility, troubleshooting, and validation for Cumulus and SONiC‑based data center fabrics.

It collects telemetry from switches and hosts to deliver actionable insights into network health, helping operators quickly detect issues and maintain smooth AI‑fabric operations

NMX‑M (NMX Manager) is NVIDIA’s management tool for monitoring the NVLink/NVSwitch fabric. It collects telemetry from the GPU interconnect, shows health and performance information, and helps operators understand and manage the fabric. It runs as part of the NVIDIA Mission Control software stack.

NCCL (NVIDIA Collective Communication Library, pronounced “Nickel”): A library developed by NVIDIA that enables fast, efficient communication between GPUs. It provides optimised routines for collective operations such as all‑reduce, broadcast, and gather, making multi‑GPU and multi‑node training in AI and HPC scalable and performant. NCCL runs over NVLink/NVSwitch or PCIe within a node, and InfiniBand or RoCEv2 between nodes. It uses CUDA under the hood, so it relies on having a compatible CUDA version.

CUDA (Compute Unified Device Architecture): A software platform developed by NVIDIA that enables programs to run thousands of tasks in parallel on GPUs, unlocking massive speedups for computing beyond graphics

MIG (Multi‑Instance GPU) allows a single NVIDIA GPU to be split into up to seven isolated GPU instances, each with its own dedicated compute cores, memory, and cache. This lets multiple users or workloads run securely and independently on the same physical GPU, improving utilisation and efficiency.

Hugging Face: A popular open‑source platform where developers share, build, and deploy AI models and datasets. It provides tools like the Transformers library and hosts millions of pre‑trained models that make it easy to develop AI applications. Think of it like an ‘AI App Store’.

Useful Troubleshooting Commands

nvidia‑smi (NVIDIA System Management Interface): A command‑line tool that monitors and manages NVIDIA GPUs, providing real‑time details such as utilization, temperature, memory usage, and running processes. It is built on the NVIDIA Management Library (NVML) and allows administrators to query or modify GPU state on supported NVIDIA hardware. It’s the primary command for GPU debugging.
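For scripted health checks, nvidia‑smi can emit CSV via its `--query-gpu` and `--format` options. The sketch below wraps that in Python. The sample line is made up for illustration and was not captured from a real system, and `query_gpus` will only work on a host with NVIDIA drivers installed.

```python
import subprocess

QUERY_FIELDS = ["index", "name", "temperature.gpu", "utilization.gpu",
                "memory.used", "memory.total"]


def parse_gpu_csv(csv_text: str) -> list[dict]:
    """Turn nvidia-smi CSV output (no header, no units) into one dict per GPU."""
    rows = []
    for line in csv_text.strip().splitlines():
        values = [value.strip() for value in line.split(",")]
        rows.append(dict(zip(QUERY_FIELDS, values)))
    return rows


def query_gpus() -> list[dict]:
    """Run nvidia-smi and parse its output (requires NVIDIA drivers on the host)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(QUERY_FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


# Illustrative sample line, not real output.
SAMPLE = "0, Example GPU, 41, 87, 61234, 81920\n"
print(parse_gpu_csv(SAMPLE))
```

On a GPU server, calling `query_gpus()` returns the same structure with live values, which makes it easy to alert on temperature or memory thresholds.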

nvidia-smi topo -m – Displays GPU‑to‑GPU, GPU‑to‑NIC, and interconnect topology including PCIe, NVLink, and NVSwitch distances. Use it to diagnose suboptimal topology or performance issues.

lspci – Shows all PCI devices on a system. Use it to verify that GPUs, NICs (Ethernet/InfiniBand), NVSwitch cards, or DPUs are present and enumerated correctly by the OS.

ethtool – Provides detailed information and configuration options for Ethernet interfaces. Use it to verify link speed, duplex, driver, firmware, and RDMA/RoCE NIC‑related offload settings.

DCGM (Data Center GPU Manager) is NVIDIA’s toolkit for monitoring, managing, and diagnosing GPU health and performance in data center environments. It provides telemetry, fault detection, NVLink statistics, and management APIs to keep large GPU clusters stable and efficient.

DCGMI (Data Center GPU Manager Interface)

dcgmi is the command-line interface for NVIDIA DCGM, used by administrators and automation tools to query, configure, and troubleshoot GPUs.

It allows operators to check GPU health, run diagnostics, view utilisation and errors, and integrate GPU monitoring into scripts and operational workflows.

ipmitool sensor: is a command that reads hardware sensor data exposed through IPMI on a server’s BMC. It displays metrics such as temperatures, voltages, fan speeds, and power readings. It’s commonly used for quick health checks and troubleshooting hardware issues on remote systems

InfiniBand / RoCE / RDMA

ibstat – Displays InfiniBand HCA (Host Channel Adapter) link status. Use it when checking whether IB interfaces are up, active, and negotiating the expected speed.

ibv_devinfo – Shows details about RDMA devices (HCAs), including capabilities, supported link types, and active ports. Use it to verify RDMA stack readiness.

rdma link / rdma dev (part of iproute2) – Shows RDMA interface state, GIDs, RoCE modes. Helpful when debugging RoCEv2 misconfigurations or link negotiation.

ibping – Tests basic InfiniBand connectivity and fabric reachability.

perfquery / ibqueryerrors – Reads IB port counters to diagnose congestion, packet loss, or fabric errors.

Ethernet / System-level Commands

ip link / ip addr – Shows interface states and addresses. Use it to confirm NICs are up and configured correctly.

ss -tuna – Displays sockets and connections. Helpful for verifying GPU jobs aren’t stuck waiting on network flows.

dmesg – Shows kernel logs, often revealing driver issues, PCIe link retraining errors, or GPU resets.
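GPU faults reported by the NVIDIA driver typically show up in the kernel log as `NVRM: Xid` lines. The sketch below scans log text for them. The line format in the regular expression and the sample log are assumptions based on commonly seen messages, and the sample lines are invented, so adjust the pattern to match what your driver version actually prints.

```python
import re

# Assumed shape of a driver Xid line: "NVRM: Xid (PCI:<address>): <code>, ..."
XID_PATTERN = re.compile(r"NVRM: Xid \(PCI:([0-9A-Fa-f:.]+)\): (\d+)")


def find_xid_events(kernel_log: str) -> list[tuple[str, int]]:
    """Return (PCI address, Xid code) for every Xid line found in the log text."""
    events = []
    for line in kernel_log.splitlines():
        match = XID_PATTERN.search(line)
        if match:
            events.append((match.group(1), int(match.group(2))))
    return events


# Invented sample log for illustration only.
SAMPLE_LOG = """\
[ 1201.1] pcieport 0000:3a:00.0: AER: Corrected error received
[ 1202.5] NVRM: Xid (PCI:0000:3b:00): 79, pid=4242, GPU has fallen off the bus.
"""

print(find_xid_events(SAMPLE_LOG))  # [('0000:3b:00', 79)]
```

On a live system the same function could be fed the text output of `dmesg`.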

Storage / RoCE Commands

nvme list – Checks attached NVMe drives, often important for local scratch performance on GPU servers.

weka status (if using WekaIO) – Displays cluster and client status when debugging storage throughput issues.

Think I’ve missed any important ones? Let me know in the comments.