In the UCS world, where a virtual NIC on a virtual server is connected to a virtual port on a virtual switch by a virtual cable, it is not surprising that there can be confusion about what path packets are actually taking through the UCS infrastructure.

Similarly, knowing the full data path through the UCS infrastructure is essential to troubleshooting, and to understanding and testing failover.

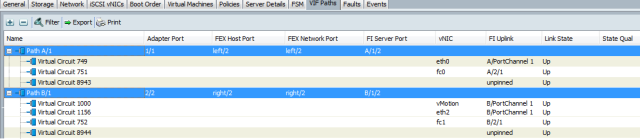

I’m sure you have all seen the VIF Paths table in UCS Manager (figure 1), which details the path that each virtual NIC or HBA takes through the infrastructure. But what do all these values mean? And where are they in the infrastructure? Answering those questions is the objective of this post.

I will also detail the relevant CLI commands to confirm and troubleshoot the complete VIF (Virtual Interface) path.

If you have ever seen and understood the film “Inception”, you should have no problem understanding Cisco UCS, where virtual machines run on virtual hosts, which run on virtual infrastructure and abstracted hardware 🙂. But in all seriousness, it’s really not that complicated.

The diagram below shows a half-width blade with a vNIC called eth0 created on a Cisco VIC (M81KR), with its primary path mapped to Fabric A. For simplicity, only one IO Module to Fabric Interconnect link is shown in the diagram, as well as only one of the Host Interfaces (HIFs / server-facing ports) on the IO Module. In this post I will focus on eth0, which is assigned virtual circuit 749.

Virtual Circuit

The first column in figure 1, Virtual Circuit, is a unique value assigned to the virtual circuit, which comprises the virtual NIC, the virtual cable (red dotted line in figure 2) and the virtual switch port. The virtual switch port and the virtual circuit share the same identifier, in this case 749.

If you do not know which virtual circuit is used by the MAC address you are interested in, or which chassis and server that virtual circuit resides on, you can use the commands below to find out.

The above output shows that the MAC address is behind Veth749. To find out which chassis and server is using Veth749, issue the command below.

The interface to which Veth749 is bound is Ethernet 1/1/2, which equates to chassis 1, server 2 (you can ignore the middle value). The description field also confirms the location and the virtual interface name on the server (eth0).
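If you check many Veths, pulling these two fields out of saved `show interface vethernet` output by hand gets tedious. Below is a minimal Python sketch of doing it with regular expressions. It is not a Cisco tool: the function name `parse_veth` is hypothetical, the sample text is the Veth966 output a reader posts in the comments, and the patterns only cover the line formats that appear in this post's comments (both "Description:" and "Port description is", plus down states such as "(BoundIfDown)").

```python
import re

# Trimmed sample: the Veth966 output quoted by a reader in the comments.
SAMPLE = """\
Vethernet966 is up
Bound Interface is Ethernet1/1/7
Hardware: Virtual, address: 0005.73f9.94c0 (bia 0005.73f9.94c0)
Description: server 1/4, VNIC vNIC-IDY-P-B
"""


def parse_veth(text: str) -> dict:
    """Pull state, bound interface and server/vNIC details out of saved
    'show interface vethernet' output (illustrative helper, not an API)."""
    info: dict = {}
    m = re.search(r"^\s*Vethernet(\d+) is (\w+)(?: \((\w+)\))?", text, re.M)
    if m:
        info["veth"] = int(m.group(1))
        info["state"] = m.group(2)    # "up" or "down"
        info["reason"] = m.group(3)   # e.g. "BoundIfDown"; None if absent
    m = re.search(r"^\s*Bound Interface is (\S+)", text, re.M)
    if m:
        info["bound"] = m.group(1)    # "--" when the Veth is not bound
    # Both description formats seen in the reader comments are accepted.
    m = re.search(
        r"^\s*(?:Description:|Port description is)\s*server (\d+)/(\d+), (VNIC|VHBA) (\S+)",
        text, re.M | re.I)
    if m:
        info["chassis"] = int(m.group(1))
        info["server"] = int(m.group(2))
        info["kind"] = m.group(3).upper()
        info["name"] = m.group(4)
    return info


if __name__ == "__main__":
    print(parse_veth(SAMPLE))
```

The "server" value is taken from the description, not from the bound interface, so it stays correct even where the last digit of the bound interface is a HIF number rather than a slot number.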

As you know (having read my blog post on “Adapter FEX” :-)), the M81KR “PALO” adapter is actually a mezzanine fabric extender, just like the IO Module (FEX) in the chassis. This means that when I create a virtual interface on the adapter, that interface is actually created on, and appears as a local interface of, the Fabric Interconnect (FI): a vNIC appears as a Veth port on the FI, and a vHBA appears as a vFC interface.

This means we will have many virtual circuits, or “virtual cables”, going down the one physical cable. Cisco UCS needs to be able to differentiate between all these “virtual cables”, and it does so by attaching a Virtual Network Tag (VN-TAG) to each virtual circuit. This way Cisco UCS can track and switch packets between virtual circuits, even if both of those virtual circuits are using the same physical cable, which the laws of Ethernet would not normally allow.
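To make the idea concrete, here is a toy Python model of that demultiplexing: every frame on the shared physical cable carries a circuit identifier, and the switch looks up the pair (physical port, circuit ID) to decide which virtual switch port the frame belongs to. It is a conceptual sketch only. It does not model the real VN-TAG header layout, the class names are hypothetical, and the second vNIC (circuit 750) is an invented example alongside eth0's circuit 749.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaggedFrame:
    """A frame as it crosses the shared physical cable (toy model)."""
    physical_port: str   # port the frame arrived on, e.g. a FEX host port
    circuit_id: int      # stands in for the virtual interface ID in the tag
    payload: bytes


class ToyVirtualSwitch:
    """Maps (physical port, circuit ID) pairs to virtual switch ports."""

    def __init__(self) -> None:
        self._table: dict[tuple[str, int], str] = {}

    def bind(self, physical_port: str, circuit_id: int) -> str:
        """Create the virtual port for a circuit; it shares the circuit's number."""
        veth = f"Veth{circuit_id}"
        self._table[(physical_port, circuit_id)] = veth
        return veth

    def ingress(self, frame: TaggedFrame) -> str:
        # The tag, not the physical port alone, identifies the virtual cable.
        return self._table[(frame.physical_port, frame.circuit_id)]


if __name__ == "__main__":
    sw = ToyVirtualSwitch()
    sw.bind("Ethernet1/1/2", 749)   # eth0 from the example above
    sw.bind("Ethernet1/1/2", 750)   # hypothetical second vNIC, same cable
    print(sw.ingress(TaggedFrame("Ethernet1/1/2", 749, b"")))  # Veth749
    print(sw.ingress(TaggedFrame("Ethernet1/1/2", 750, b"")))  # Veth750
```

Because the lookup key includes the tag, two frames arriving on the same physical port can land on different virtual ports, which is exactly what a plain Ethernet port cannot distinguish.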

Adapter Port

The Cisco VIC (M81KR) has two physical 10Gb traces (paths / ports): one trace to Fabric A and one trace to Fabric B. This is how the VIC can provide hardware fabric failover and fabric load balancing to its virtual interfaces. These adapter ports are listed as 1/1 to Fabric A and 2/2 to Fabric B.

In the case of a full-width blade, which can take two mezzanine adapters, this doubles the number of paths to four.

The VIC 1240 and VIC 1280 have 20Gb and 40Gb to each fabric respectively, but there is still only a single logical path to each fabric, because the links are hardware port channels: 2x10Gb per fabric for the VIC 1240 and 4x10Gb per fabric for the VIC 1280.
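The arithmetic behind those figures is simply traces per fabric times 10Gb, with one logical path per adapter per fabric however many traces are bundled. A minimal Python sketch, assuming the IO module lights every trace (as the comments below point out, not every adapter and IOM combination does); the dictionary and function names are illustrative.

```python
# 10Gb traces per fabric for each adapter, as described above.
# Assumes the IO module lights every trace; some combinations light fewer.
TRACES_PER_FABRIC = {"M81KR": 1, "VIC 1240": 2, "VIC 1280": 4}
TRACE_GBPS = 10


def fabric_bandwidth_gbps(adapter: str) -> int:
    """Bandwidth of the single logical path (hardware port channel) to one fabric."""
    return TRACES_PER_FABRIC[adapter] * TRACE_GBPS


def logical_paths(adapters: int, fabrics: int = 2) -> int:
    """One logical path per adapter per fabric, regardless of trace count."""
    return adapters * fabrics


if __name__ == "__main__":
    for name in TRACES_PER_FABRIC:
        print(f"{name}: {fabric_bandwidth_gbps(name)}Gb per fabric")
    print("Full-width blade with two mezz adapters:", logical_paths(2), "paths")
```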

The new M3 servers, which have a modular LAN on Motherboard (mLOM), provide additional on-board paths.

FEX Host Port

In the lab setup I am using, the FEX modules are 2104XPs, which have 8 internal server-facing ports (sometimes referred to as Host Interfaces, or HIFs) that connect to the blade slots: port 1 to blade slot 1, port 2 to blade slot 2, and so on.

FEX Network Port

The 2104XP IO Modules also have 4 Network Interfaces (NIFs / FEX uplinks), which connect to their upstream Fabric Interconnect.

Fabric Interconnect

The two FI interfaces listed in figure 1 are FI Server Port and FI Uplink.

The server-facing ports on the Fabric Interconnect are called FI Server Ports and can be confirmed in the output of the “show interface fex-fabric” command, where they are listed in the second column, “Fabric Port” (figure 5).

The FI Uplink interface can be found by checking the pinning of the Veth interface.

As can be seen from the above figure, Veth749 is pinned to FI Uplink (Border Interface) Port-Channel 1.

Armed with all the above you should now have the information necessary to understand the packet flow within the UCS and be able to troubleshoot as well as monitor and understand failover.
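Pulling the sections together, one row of the VIF Paths table can be read as an ordered list of hops from the vNIC to the uplink. The Python sketch below represents a row as a record; the class and field names are illustrative, not a UCS Manager API. For eth0, the FEX network port and FI server port are left as None because this post does not state their values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VifPath:
    """One VIF Paths row, read as the hops a frame takes from vNIC to uplink.
    Field names are illustrative only."""
    virtual_circuit: int             # also the Veth number on the FI
    adapter_port: str                # adapter trace (or port channel) to the fabric
    fex_host_port: str               # HIF on the IO module (server-facing)
    fex_network_port: Optional[str]  # NIF on the IO module (FEX uplink)
    fi_server_port: Optional[str]    # FI port facing the IO module
    fi_uplink: str                   # border interface the Veth is pinned to

    def hops(self) -> list[str]:
        """Hops in path order, with '?' for any value not known."""
        return [
            f"vNIC (virtual circuit {self.virtual_circuit})",
            f"adapter port {self.adapter_port}",
            f"FEX host port {self.fex_host_port}",
            f"FEX network port {self.fex_network_port or '?'}",
            f"FI server port {self.fi_server_port or '?'}",
            f"Veth{self.virtual_circuit}",
            f"FI uplink {self.fi_uplink}",
        ]


if __name__ == "__main__":
    eth0 = VifPath(749, "1/1", "Ethernet1/1/2", None, None, "Port-Channel 1")
    print(" -> ".join(eth0.hops()))
```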

Hope this helps.

GREAT article. Thanks for taking the time to write this out. The new Cisco UCS 2204XP/2208XP Fabric Extenders = 40 and 80 Gbps and real port-channels to the FI!

Most straightforward explanation of VN-TAG I've seen in a while, nice one.

Excellent article. It cleared a lot of things up for me. One question though: you say that you can ignore the middle number of the bound interface. I have a server with a bound interface of eth 2/1/2, but the server is a full-width B250 with two CNA cards and is listed as being in chassis 2, slot 1. Why is this? Does the slot 2 refer to the second CNA card?

Hi Anthony

Glad you found the post useful, and you are absolutely right. The B250, being a full-width blade, takes two blade slots, i.e. if it is in slot 1, it takes up and uses the mid-plane traces of both slots 1 and 2. As such, the server will always be reported as being in slot 1, but will have mezzanine IDs for both slots 1 and 2. So in your example, a B250 in slot 1 of chassis 2 will have 2 possible bound interfaces, eth 2/1/1 and 2/1/2, each meaning a different mezz card. Hope that makes sense.

Colin

Things are even easier to understand now with the 62xx fabric interconnects coupled with 22xx IO modules, where port channelling can also be done between the two.

Hi, if we have a look at the port-channels (POs) in UCS, how do we explain what Po1292 to Po1310 are?

I believe they are the FEX-host ports, the internal facing ports that connect to the blade servers.

But how to figure those numbers out?

```
pod-A(nxos)# sh port-ch su
--------------------------------------------------------------------------------
Group Port-       Type     Protocol  Member Ports
      Channel
--------------------------------------------------------------------------------
9     Po9(SU)     Eth      LACP      Eth1/1(P)    Eth1/2(P)    Eth1/3(P)
                                     Eth1/4(P)
1025  Po1025(SU)  Eth      NONE      Eth1/5(P)    Eth1/6(P)    Eth1/7(P)
                                     Eth1/8(P)
1026  Po1026(SU)  Eth      NONE      Eth1/9(P)    Eth1/10(P)   Eth1/11(P)
                                     Eth1/12(P)
1027  Po1027(SU)  Eth      NONE      Eth1/13(P)   Eth1/14(P)   Eth1/15(P)
                                     Eth1/16(P)
1292  Po1292(SD)  Eth      NONE      Eth1/1/13(D) Eth1/1/15(D)
1296  Po1296(SU)  Eth      NONE      Eth2/1/17(P) Eth2/1/19(P)
1298  Po1298(SD)  Eth      NONE      Eth3/1/13(D) Eth3/1/15(D)
1303  Po1303(SU)  Eth      NONE      Eth3/1/17(P) Eth3/1/19(P)
1305  Po1305(SD)  Eth      NONE      Eth1/1/21(D) Eth1/1/23(D)
1306  Po1306(SD)  Eth      NONE      Eth3/1/21(D) Eth3/1/23(D)
1308  Po1308(SD)  Eth      NONE      Eth3/1/25(D) Eth3/1/27(D)
1310  Po1310(SD)  Eth      NONE      Eth1/1/9(D)  Eth1/1/11(D)
```

Hi Sandev

If you are using VIC1240s or VIC1280s, there are mandatory hardware port channels for all the ports of those cards that are in the same port group (fabric); that’s why you see a port-channel ID as the adapter port in the VIF Paths tab.

You can see this by connecting into the NX-OS element of the FI and running “sh port-channel summary”. You will see your port-channels, i.e. Po1292, and the member ports will be the host interfaces (HIFs) on the IOM that map to that blade slot.
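To go from that summary to the HIFs behind each port-channel without reading it by eye, a short Python sketch can fold the wrapped member lines back onto their port-channel, then keep only the port-channels whose members are three-level FEX host interfaces (chassis/x/port) rather than FI ports. The parsing rules are inferred from the output pasted above, not from a documented format, and the function names are hypothetical.

```python
import re

# Trimmed from the "sh port-ch su" output pasted above.
SAMPLE = """\
9     Po9(SU)     Eth      LACP      Eth1/1(P)    Eth1/2(P)    Eth1/3(P)
                                     Eth1/4(P)
1292  Po1292(SD)  Eth      NONE      Eth1/1/13(D) Eth1/1/15(D)
1296  Po1296(SU)  Eth      NONE      Eth2/1/17(P) Eth2/1/19(P)
"""

PO_LINE = re.compile(r"\s*\d+\s+(Po\d+)\(")          # start of a new port-channel
MEMBER = re.compile(r"Eth(\d+(?:/\d+)+)\((\w)\)")     # e.g. Eth1/1/13(D)


def parse_port_channels(text: str) -> dict[str, list[tuple[str, str]]]:
    """Map each port-channel to (member, flag) pairs, folding wrapped lines."""
    channels: dict[str, list[tuple[str, str]]] = {}
    current = None
    for line in text.splitlines():
        m = PO_LINE.match(line)
        if m:
            current = m.group(1)
            channels[current] = []
        if current is not None:
            channels[current].extend(MEMBER.findall(line))
    return channels


def hif_port_channels(channels: dict[str, list[tuple[str, str]]]) -> dict[str, list[str]]:
    """Keep port-channels whose members are FEX host ports (chassis/x/port)."""
    out = {}
    for po, members in channels.items():
        hifs = [name for name, _flag in members if name.count("/") == 2]
        if hifs:
            out[po] = hifs
    return out


if __name__ == "__main__":
    print(hif_port_channels(parse_port_channels(SAMPLE)))
```

Run against the full output, this separates the FI uplink and FEX-fabric port-channels (Po9, Po1025 to Po1027) from the adapter port-channels (Po1292 and up), whose member HIFs can then be matched to blade slots.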

Regards

Colin

Hi, I just have one question. Today we upgraded our system with 2208 extenders in place of the old ones (2104XP).

We have 2 older B200 M2s with M81KR adapters, and we have added three new B200 M3 servers with a VIC 1240 plus port expander (8 ports per server).

2 cables are uplinking from the extenders to each interconnect. All is working, but we have a warning on the 2208 extenders (unsupported connection). I also noticed 20 ports toward the servers on the extenders (I guess the system assumes 5 servers with 4 ports each per extender, even though the B200 M2s only have 1 port toward the extenders), and I guess it should be 3×4+2×1=14.

Any experience with mixing old servers with m81kr and new 1240 adapters?

Also, the 2208 has 8 uplinks grouped as 2×4 ports. I was wondering if I should connect the 1st and 5th ports instead of the first two (I can't find a detailed 2208 connection scheme anywhere)…

Or should I just re-acknowledge the chassis 🙂 Right now the discovery policy is set to 2 link.

Thanks

Hi Igor

You are right that each 2208XP will provide 4 x 10Gb Host Interfaces (HIFs) to each blade slot, connecting to that slot's 4 KR ports (2 to the VIC1240 and 2 to the Mez slot); see my previous post on this.

So to take your example, a 2208 has 32 HIFs (Backplane Ports, if viewed in UCSM). The first 4 map to slot 1 (ports 1 and 3 to the mLOM, ports 2 and 4 to the Mez slot), so your M3 blades with the port expander will light up all four of these backplane ports.

To confirm (using the example of a blade in slot 1), go to the Equipment tab > Chassis 1 > IO Module 1 and expand “Backplane Ports”. You should see backplane ports 1/1, 1/2, 1/3 and 1/4 all up, each with a reported peer of sys/chassis-1/blade-1/adaptor-x/ext-eth-x.

If the blade in slot 1 happened to be an M1 or M2 blade (i.e. no mLOM) with an M81KR Mez card, then only the first backplane port to that blade slot would even show up, i.e. you would only see backplane port 1/1.
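Colin's slot 1 example generalises to a small rule. A later reply in this thread confirms that a 2208XP gives each slot a consecutive block of 4 HIFs (1 to 4 for blade 1, 5 to 8 for blade 2, and so on); the split of 1st/3rd HIF to the mLOM and 2nd/4th to the Mez slot is stated above only for slot 1 and is assumed here to repeat for every slot of an M3 blade. A minimal Python sketch under those assumptions, with a hypothetical function name:

```python
HIFS_PER_SLOT_2208XP = 4  # 32 HIFs shared across 8 half-width slots


def hifs_for_slot_2208xp(slot: int) -> dict[str, list[int]]:
    """HIF (backplane port) numbers a 2208XP gives one M3 blade slot, split by
    the adapter they reach. The 1st/3rd -> mLOM, 2nd/4th -> mezz pattern is
    stated for slot 1 and assumed to repeat for every slot."""
    if not 1 <= slot <= 8:
        raise ValueError("half-width blade slots are numbered 1 to 8")
    first = (slot - 1) * HIFS_PER_SLOT_2208XP + 1
    return {"mlom": [first, first + 2], "mezz": [first + 1, first + 3]}


if __name__ == "__main__":
    for slot in (1, 4):
        print(slot, hifs_for_slot_2208xp(slot))
```

Slot 4 comes out as HIFs 13 and 15 for the mLOM, which lines up with the Eth1/1/13 and Eth1/1/15 members of Po1292 in the port-channel summary posted earlier in the thread, if that chassis has 2208XPs. With an M1/M2 blade and a single-trace card such as the M81KR, only the first HIF of the block comes up.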

I have lots of clients that run a mix of Gen 1 and Gen 2 kit as well as M1, M2 and M3 servers and have never had an issue; you just need to understand what each combination gives you bandwidth-wise.

Re your FEX to FI uplinks, as far as I know there is no recommendation on how to connect the ports of a 2208 (I generally just connect them top down).

And lastly, you are right that a chassis re-ack is required if the connectivity between the FEX and the FI changes or differs from your Chassis Discovery Policy.

Regards

Colin

Colin, thanks a lot. Chassis Re-ack solved warning log 🙂

This is good stuff. Question: how can you tell which path the host is taking, adapter A or B, or is it load balancing (when and why)?

Hi Bill

You would have mapped your vHBA / vNIC to fabric A or B on creation; if you meant something different, please fire back.

Regards

Colin

Hey, they are connected to both A and B, so I wanted to know who makes the data path decisions if VMware is involved?

Ahh, I get you. If you have 1 vNIC mapped to Fabric A and another mapped to Fabric B and you team them in VMware, then VMware would load balance across the 2 using the selected algorithm (Port-Group is recommended, to keep VM adapters on the same fabric and prevent upstream LAN switches seeing that VM MAC on both fabrics).

If you are using a VIC1240/1280, then you will also have some sub load balancing going on, handled by the Cisco VIC, as the VIC1240 and VIC1280 have 2x10Gb and 4x10Gb hardware port channels per fabric respectively.

So the first decision would be made by VMware, i.e. whether to use adapter A or B. Then the Cisco VIC would further load balance that traffic across the hardware port-channel between the VIC and the FEX.
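The two decisions can be modelled as two independent functions: the teaming policy picks the vNIC (and so the fabric), then the adapter hashes each flow onto one trace of its port channel. The Python sketch below is hypothetical throughout. Stage 1 is only loosely modelled on vSphere's "route based on originating virtual port" idea, stage 2 uses CRC32 as an arbitrary stand-in because the VIC's real hash is not described here, and the vNIC names and flow are invented.

```python
import zlib

# Hypothetical model of the two decisions described above; neither the real
# vSphere teaming algorithm nor the Cisco VIC hash is reproduced here.


def choose_vnic(virtual_port_id: int, vnics: list[str]) -> str:
    """Stage 1 (vSwitch teaming): pick the vNIC, and with it fabric A or B."""
    return vnics[virtual_port_id % len(vnics)]


def choose_trace(flow: tuple[str, str, int, int], traces_per_fabric: int) -> int:
    """Stage 2 (adapter): hash a flow onto one trace of the hardware port
    channel (2 on a VIC 1240, 4 on a VIC 1280). CRC32 is a stand-in hash."""
    return zlib.crc32(repr(flow).encode()) % traces_per_fabric


if __name__ == "__main__":
    vnics = ["vmnic0 (Fabric A)", "vmnic1 (Fabric B)"]
    flow = ("10.0.0.5", "10.0.1.9", 49152, 443)  # hypothetical src/dst IP and ports
    vnic = choose_vnic(virtual_port_id=7, vnics=vnics)
    print(vnic, "-> trace", choose_trace(flow, traces_per_fabric=2))
```

The design point the sketch captures is that a given flow always hashes to the same trace, so packets within a flow stay in order, while different flows spread across the bundle.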

Regards

Colin

Thanks Colin!

Got another question… I need a way to view VM mappings (1 to 1) to uplinks on the N1k (DVS). I can see the port map from the VM to the Veth on the 1K but not going north towards the FIs.

Hello, we have just implemented UCS. The issue we are encountering is that we are able to ping from hosts in UCS to a physical server outside of the UCS environment, but we are not able to ping from a physical host outside of UCS to a server within UCS. Note that the servers within and outside of the UCS environment both carry the same VLAN.

any feedback if others have experienced this and have a resolution would be greatly appreciated.

Hi, assuming there are no host firewalls preventing ICMP or something similar, I would confirm that the correct VLANs are on the correct vNICs and that your native VLAN is consistent within UCS and the upstream network.

I would see at what point my pings stop/start working southbound, i.e. put an IP (SVI) on the desired VLAN of the first upstream switch that the UCS FIs uplink to and see if you can ping the UCS servers. If not, you know your issue is between the upstream switch and the FIs, so then check the UCS uplink configs etc. You could also check the ARP and MAC tables from the outside host, then work your way to the UCS host and see where the issue is.
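The "find where the pings stop" approach can be scripted: test each hop in order and report the first one that does not answer. A minimal Python sketch, assuming the operating system's `ping` command is available (`-c` sets the count on Linux/macOS, `-n` on Windows) and treating exit status 0 as a reply; the function names are hypothetical, and the hop list (for example an SVI on the upstream switch, then the UCS host) is whatever addresses you supply.

```python
import platform
import subprocess
from typing import Callable, Iterable, Optional


def ping(host: str) -> bool:
    """Send one ICMP echo with the OS ping command; True on exit status 0."""
    count_flag = "-n" if platform.system() == "Windows" else "-c"
    try:
        result = subprocess.run(["ping", count_flag, "1", host],
                                stdout=subprocess.DEVNULL,
                                stderr=subprocess.DEVNULL,
                                timeout=5)
    except (FileNotFoundError, subprocess.TimeoutExpired):
        return False  # no ping binary, or no answer within 5 seconds
    return result.returncode == 0


def first_unreachable(hops: Iterable[str],
                      reachable: Callable[[str], bool] = ping) -> Optional[str]:
    """Test hops in path order and return the first that does not answer,
    or None if every hop replies."""
    for hop in hops:
        if not reachable(hop):
            return hop
    return None
```

For example, `first_unreachable(["192.0.2.1", "192.0.2.50"])` (documentation addresses standing in for your SVI and UCS host) returns the first address that fails, which narrows the problem to the segment just before it. The `reachable` parameter lets you swap in another check, such as a TCP connect, if ICMP is filtered.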

Post back how you get on.

Regards

Colin

or disable firewall on servers within ucs 🙂

Hello FJM, I’d suggest you take a look at the Windows Firewall as well as domain firewall.

Dear Colin,

In your figure 4, you mentioned the bound interface eth1/1/2. However, I think it actually means FEX 1, module 1 and HIF 2, not what you specified. In my UCS output, it reads like this:

```
myucs(nxos)# sh int veth966
Vethernet966 is up
Bound Interface is Ethernet1/1/7
Hardware: Virtual, address: 0005.73f9.94c0 (bia 0005.73f9.94c0)
Description: server 1/4, VNIC vNIC-IDY-P-B
Encapsulation ARPA
Port mode is trunk
EtherType is 0x8100
Rx
0 unicast packets 0 multicast packets 0 broadcast packets
0 input packets 0 bytes
0 input packet drops
Tx
0 unicast packets 0 multicast packets 0 broadcast packets
0 output packets 0 bytes
0 flood packets
0 output packet drops
```

Hi James

Thanks for the comment.

Things have moved on a little since this post, which was written when only Gen 1 FEXs were available and the bound interface was read as Chassis No./Remote Entity/Slot No.

With Gen 1 FEXs, the slot number always matched the HIF port number, as it was a 1-to-1 mapping, i.e. blade 1 used HIF 1 and so on. So the last digit of the bound interface was always referenced as the blade slot number, as this made more sense to customers than HIF port.

Gen 2 FEXs, as you know, have multiple HIFs per slot: the 2204XP has 2 HIFs per blade and the 2208XP has 4 HIFs per blade. So the last digit should no longer be considered the slot number but the HIF port, as you rightly mention.
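Converting a bound interface back to a blade slot therefore depends on the IO module. A minimal Python sketch, assuming each slot gets a consecutive block of HIFs: that is stated for the 2104XP (HIF n to slot n) and, in a later reply, for the 2208XP (HIFs 1 to 4 to blade 1, 5 to 8 to blade 2), and is assumed here for the 2204XP. The function names are hypothetical.

```python
# HIFs per half-width blade slot for each IO module, as given in this thread.
HIFS_PER_SLOT = {"2104XP": 1, "2204XP": 2, "2208XP": 4}


def parse_bound_interface(name: str) -> tuple[int, int, int]:
    """Split a bound interface such as 'Ethernet1/1/9' into
    (chassis, middle value, HIF port)."""
    prefix = "Ethernet"
    if not name.startswith(prefix):
        raise ValueError(f"unexpected interface name: {name!r}")
    parts = [int(p) for p in name[len(prefix):].split("/")]
    if len(parts) != 3:
        raise ValueError(f"expected chassis/x/port, got {name!r}")
    chassis, middle, hif = parts
    return chassis, middle, hif


def blade_slot(hif: int, iom: str) -> int:
    """Blade slot behind a HIF, assuming consecutive HIF blocks per slot."""
    return (hif - 1) // HIFS_PER_SLOT[iom] + 1


if __name__ == "__main__":
    chassis, _, hif = parse_bound_interface("Ethernet1/1/9")
    print(f"chassis {chassis}, slot {blade_slot(hif, '2208XP')}")  # chassis 1, slot 3
```

Ethernet1/1/9 on a 2208XP gives slot 3, matching the "server 1/3" description in a reader's output further down, and the post's Ethernet 1/1/2 on a 2104XP still gives slot 2.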

Regards

Colin

Hi Colin,

Great post. Now I feel more confident about UCS. Which documents or manuals do we need to refer to for such detailed information about other aspects? Did you gain these insights through a hands-on approach, or a mix of reading relevant documentation and hands-on experience?

Hello Colin,

Thank you for a great article, but I have a remark:

There is one chassis in our lab with 7 blades, each with 1 CNA (either an M81KR or a 1240). The chassis is equipped with 2208XP IOMs.

Now when I look at a veth (1156, for example), I can see that the bound interface doesn’t correspond to the mapping described above, where the last digit is the server number (this is server 3, in fact):

```
UCS-FI-B(nxos)# sho interfa vethernet 1156
Vethernet1156 is up
Bound Interface is Ethernet1/1/9
Port description is server 1/3, VNIC eth3
```

Rather, it shows (as I understand from Servers/VIF Paths) the “FEX Host port”, which corresponds to the IOM’s backplane port 1/9, as far as I can tell from Equipment/IOM/Backplane ports. And it seems to be specific to UCSM version 2.2(1c).

Also I cannot see “sho pinn” command in nxos mode of this version.

Hi Andrew

Previously with Gen 1 kit, there was only ever 1 to 1 mapping of Host Interface FEX ports (HIFs) to Blade slots. i.e HIF 1 to Blade 1, HIF 2 to Blade 2 etc..

With Gen 2 FEX’s there are now numerous HIFs to Blade slots (2 per slot for the 2204XP and 4 per slot for the 2208XP) so a 2208XP has 4 HIFs (FEX host ports 1-4) to Blade 1, 4 HIFs (FEX host ports 5-8) to Blade 2 and 4 HIFs (FEX host ports 9-12) to Blade 3 etc..

With an adapter that only has a single 10Gb trace (like your M81KR), it will connect through to the first HIF allocated to the blade, which is why you are seeing blade 3 connected to Ethernet 1/1/9.

I did an update to this post that details this.

Regards

Colin

Hi Colin,

Many thanks to you for the explanation.

Would you happen to know what VN-tagged traffic looks like? Would it be possible for you to capture a PCAP with the VN-Tag?

Hi Morgan

You won’t see the VN-Tag in a packet capture, as it only exists between the adapter/FEX and the parent switch (FI/Nexus) and is put on and taken off in hardware.

But if you really want to see the packet format etc., have a look at the submission to the IEEE below. The original submission was under 802.1Qbh, which has since been superseded by 802.1BR.

new-pelissier-vntag-seminar-0508.pdf

Regards

Colin

What a great article, thank you very much for such a good explanation!

Hi

Is there a way to reduce the speed of virtual interfaces, for example a vNIC presented for management to an ESX host?

Also, I notice that some interfaces show a 20Gb link while others show a 10Gb link. Any idea why?

Hi KP

Yes you can rate-limit any vNIC via a QoS policy.

A vNIC's reported speed comes from the number of traces (KR ports) that are lit up between the Mez card and the IO Module. These speeds can be 10, 20 or 40Gb depending on your combination of hardware.

(see my post on 20GB + 20GB = 10GB, M3 IO Explained)

Regards

Colin

Hi Colin,

That was a wonderful post. I have a question: I just want to get clear on VIF, VIF path, virtual circuit and VN-Link. Could you differentiate them for me?

VIF: the virtual interface created within the FI, assigned an arbitrary number, e.g. vEth 789 / vFC 789.

Virtual Network Link (VN-Link): the logical point-to-point connection between the vNIC/vHBA and the vEth/vFC.

Virtual Circuit means the same as VN-Link

VIF Path is the path through the physical infrastructure the Virtual Link takes

I would suggest you also see my post “Under the UCS Kimono”, as I go into a lot more detail there.

Regards

Colin

Thanks guru, that was cool; it cleared up so many doubts.

Thanks & Regards

Ashish

Hi,

What I’m observing on my UCS half-width blade is that the Ethernet and FC VIFs go down frequently, with the reason “bound physical interface down”. The outputs from when it goes down are pasted below.

```
FI-B(nxos)# sh int Veth790
Vethernet790 is down (BoundIfDown)
Bound Interface is --
Hardware: Virtual, address: 002a.6a30.d460 (bia 002a.6a30.d460)
Description: server 1/5, VNIC eth1

FI-B(nxos)# sh int Veth8984
Vethernet8984 is down (nonPartcipating)
Bound Interface is --
Hardware: Virtual, address: 002a.6a30.d460 (bia 002a.6a30.d460)
Description: server 1/5, VHBA fc1
Encapsulation ARPA
Port mode is access
EtherType is 0x8100

FI-B(nxos)# sh interface Ethernet1/1/9
Ethernet1/1/9 is down (Link not connected)
Hardware: 10000 Ethernet, address: 10f3.11e0.afa0 (bia 10f3.11e0.afa0)
```

I decommissioned/re-commissioned the blade and disassociated and re-associated the SP, but to no avail. Can you please help me work out why Ethernet1/1/9 is going down? Thanks

That would be an issue with the FC interface that the vHBA is pinned to. Check the link between the FI and the SAN, and look for errors/drops/flaps on the FI and SAN interfaces. It may be a setting mismatch, or a dodgy SFP or cable.

Regards

Colin

I ran across this while looking up supplemental documentation on VIF. This page does a superb job of breaking out the ports and useful CLI commands for path tracing. Thanks again.

Really very useful information, and I appreciate your patience in explaining every query. Thanks Colin.
